Splunk: The $28 Billion Bet That Finally Gives Cisco a Data Operating System

Cisco spent $28 billion on Splunk—half their annual revenue—because they had best-in-class tools that couldn't talk to each other. This isn't just an acquisition. It's the data operating system that makes everything else possible: AgenticOps, unified observability, AI-driven automation.

Part of "Building Toward Amsterdam" - A public learning exercise ahead of Cisco Live EMEA 2026

Read the series: Why I'm Making the Bull Case • Silicon One: Cisco's Ethernet Bet • Hypershield: Security in the Data Path

Let's talk about the elephant in the room: Cisco spent $28 billion on Splunk, its largest acquisition ever by a wide margin.

That's not a product buy. That's not even a market expansion play. That's a recognition that Cisco had a fundamental architectural gap in their portfolio, and they couldn't build their way out of it fast enough.

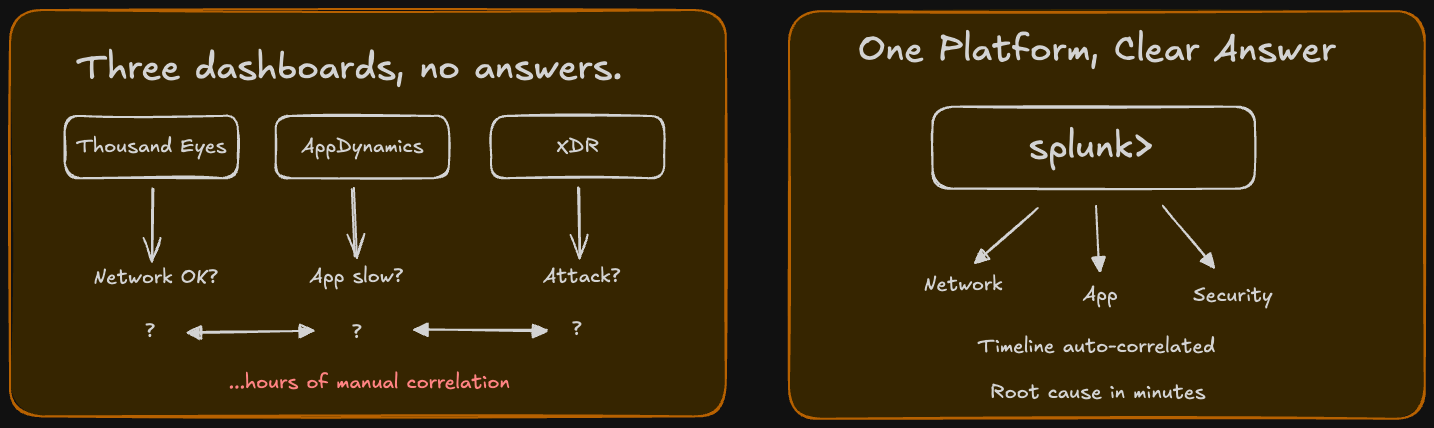

Here's the gap: For years, Cisco has had best-in-class point solutions. ThousandEyes sees network paths with incredible granularity. AppDynamics understands application performance. XDR detects security threats. Catalyst and Meraki manage network infrastructure.

But they all spoke different languages. Different data formats, different consoles, different operational models. A network engineer troubleshooting a performance issue in ThousandEyes couldn't easily correlate it with application metrics from AppDynamics or security events from XDR.

It's like having fluent speakers of five different languages in the same room but no translator. Everyone has valuable information, but nobody can connect the dots.

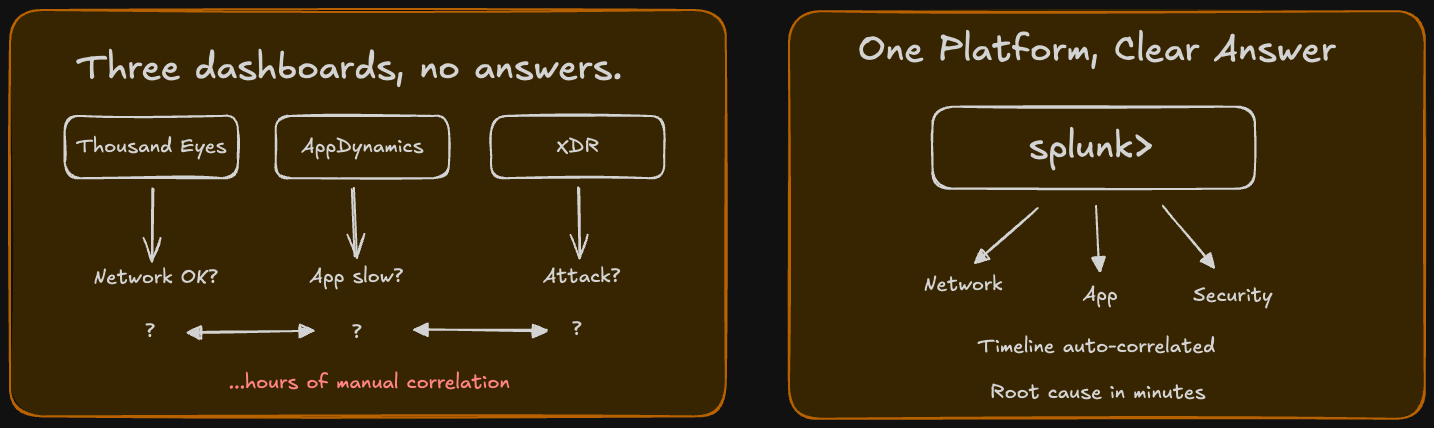

Splunk is the translator. More than that—it's the universal data substrate that lets all of Cisco's tools actually work together.

And if Cisco executes this right, it's not just an integration play. It's the foundation for everything else they're trying to build: AgenticOps, AI Canvas, unified observability, predictive intelligence.

Let me show you why this acquisition is either brilliant or a $28 billion mistake. There's not much middle ground.

The Portfolio Problem Cisco Couldn't Ignore

Here's what was broken, and why it mattered so much.

Imagine you're a network operations engineer. A critical application is slow. Users are complaining. You need to figure out what's wrong—fast.

You log into ThousandEyes. The network path looks fine—no packet loss, latency is normal, routing is optimal. So it's not the network... or is it?

You check AppDynamics. Application response time is elevated, but the code execution looks normal. Database queries are fast. So it's not the app... or is it?

You look at XDR. No active threats, no unusual traffic patterns. So it's not a security issue... or is it?

Now you're in a war room with three different specialists, each looking at their own telemetry, trying to manually correlate timestamps and infer relationships. Is the app slow because of a subtle network issue ThousandEyes didn't flag? Is there a security policy blocking specific traffic that XDR sees as normal? Is there a configuration change in the network that correlates with when the app degraded?

You spend hours stitching together clues from disparate systems. And even when you find the root cause, there's no automated way to prevent it next time because the systems don't share a common data foundation.

This is the problem. Not in one customer environment—in thousands of them. Cisco's portfolio breadth was both a strength (best-in-class tools) and a weakness (operational silos).

As Tom Casey, Splunk's Global SVP of Products and Technology, put it: "Unlike some other Cisco acquisitions that took a long time to begin integrating, we started integrating from day one and did it differently, reorganizing all of the observability capabilities from Cisco into Splunk." (Splunk and Cisco integration moving apace)

That "day one" urgency tells you everything. This wasn't optional. This was existential.

What Splunk Actually Solves

Let's get specific about what Splunk brings to the table and why Cisco couldn't just build this themselves.

1. A Proven Data Platform at Massive Scale

Splunk already handles petabytes of data across security logs, infrastructure metrics, business analytics, and application telemetry. It ingests, indexes, correlates, and makes queryable virtually any machine-generated data.

Cisco's attempting to integrate their domain-specific telemetry—ThousandEyes network paths, Catalyst fabric data, Hypershield security events, AppDynamics application traces—as first-class Splunk data sources. (Cisco and Splunk Announce Integrated Full-Stack Observability Experience for the Enterprise)

The key architectural point: Splunk provides a common data model. Instead of ThousandEyes data in one format, AppDynamics in another, and XDR in a third, fields get mapped to a shared structure (Splunk's Common Information Model is the usual mechanism) so that correlations are actually possible.
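To make the normalization idea concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `CommonEvent` shape, the input field names (`ts_ms`, `eventTime`, `observed_at`), and the sample records are invented, and they are not the real ThousandEyes, AppDynamics, XDR, or Splunk formats.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CommonEvent:
    """Hypothetical common shape; not Splunk's actual data model."""
    timestamp: datetime  # always stored as UTC
    source: str          # which tool produced the record
    entity: str          # path, service, or host the record describes
    kind: str            # e.g. "latency", "response_time", "alert"
    value: float


def from_network(rec: dict) -> CommonEvent:
    # Assumed network-tool format: epoch milliseconds, latency in ms.
    ts = datetime.fromtimestamp(rec["ts_ms"] / 1000, tz=timezone.utc)
    return CommonEvent(ts, "network", rec["path"], "latency", float(rec["latency_ms"]))


def from_app(rec: dict) -> CommonEvent:
    # Assumed APM format: ISO 8601 string, response time in seconds.
    ts = datetime.fromisoformat(rec["eventTime"]).astimezone(timezone.utc)
    return CommonEvent(ts, "app", rec["service"], "response_time", rec["resp_s"] * 1000.0)


def from_security(rec: dict) -> CommonEvent:
    # Assumed security format: epoch seconds, integer severity.
    ts = datetime.fromtimestamp(rec["observed_at"], tz=timezone.utc)
    return CommonEvent(ts, "security", rec["host"], "alert", float(rec["severity"]))


# Made-up sample records, one per tool, each in its own native shape.
network_rec = {"ts_ms": 1736936520000, "path": "branch-to-dc", "latency_ms": 180}
app_rec = {"eventTime": "2025-01-15T10:23:00+00:00", "service": "checkout", "resp_s": 2.5}
security_rec = {"observed_at": 1736936400, "host": "fw-01", "severity": 2}

# Once everything shares one shape, sorting and joining by time is trivial.
events = sorted(
    [from_network(network_rec), from_app(app_rec), from_security(security_rec)],
    key=lambda e: e.timestamp,
)

for e in events:
    print(e.timestamp.isoformat(), e.source, e.kind, e.value)
```

The point of the sketch is the last step: three records with different timestamp encodings and units end up on one timeline, which is the precondition for any cross-domain correlation.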

2. OpenTelemetry Support and Ecosystem

Splunk isn't a proprietary black box. It supports OpenTelemetry—the open-source standard for observability data—and has a massive connector ecosystem.

This matters because Cisco knows customers aren't going to rip out their existing monitoring tools. They might have Datadog for infrastructure monitoring, Prometheus for Kubernetes metrics, ELK for log aggregation.

The strategy is pragmatic: Bring your multi-vendor data to Splunk. We'll make Cisco gear a first-class citizen with deep integration, but we won't force you to throw away everything else. (March 2024: A New Day for Data: Cisco and Splunk)

It's an acknowledgment that the "single vendor soup-to-nuts" strategy doesn't work anymore. Customers want best-of-breed, but they also want integration. Splunk provides the integration layer.

3. The AI Training Ground

Here's where this gets strategically interesting.

AgenticOps—Cisco's vision for AI agents that proactively manage and troubleshoot infrastructure—requires rich, correlated data to be effective. Generic LLMs don't understand your specific network topology, application dependencies, or security policies.

Splunk becomes the data corpus for training Cisco's Deep Network Model. Network performance context, security posture, application health, infrastructure state—all timestamped, correlated, and contextualized.

That's what makes domain-specific AI actually useful versus generic chatbots that lack operational context. (Insights From Cisco Live 2024: Splunk Integration, Security (And More Security), And AI Pragmatism)

Without Splunk, AgenticOps is vaporware. With Splunk, it becomes a platform play: AI that understands your entire technology stack because it has unified telemetry from all of it.

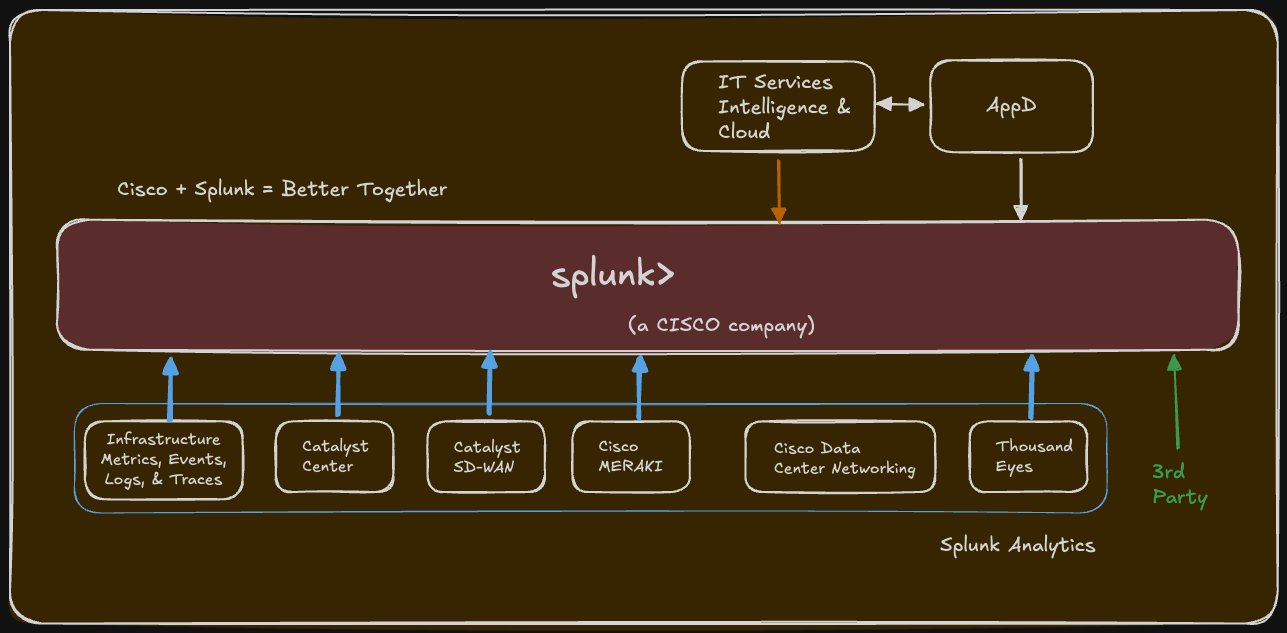

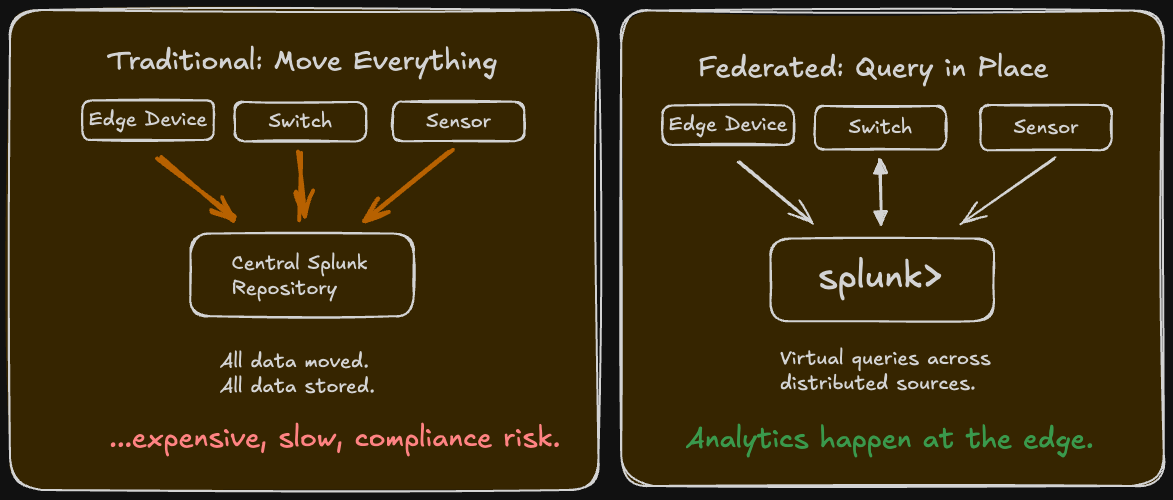

The Cisco Data Fabric: Federated Intelligence

At Splunk .conf25 in September 2025, Cisco unveiled the "Cisco Data Fabric," a strategic architectural direction that shows how they're thinking about the Splunk integration long-term. (Cisco-Splunk strategy shift unveiled with Data Fabric)

This is important because it signals a shift away from centralized data ingestion toward federated analytics.

The traditional approach: Move all data into one central repository (Splunk), analyze it there, and present results.

The federated approach: Leave data where it lives (on network devices, in edge systems, in cloud storage), but virtualize it so analytics can run across distributed sources without moving everything.

Why does this matter?

Cost: Moving petabytes of telemetry from network devices to a central repository is expensive—both in bandwidth and storage costs.

Compliance: Some data legally can't leave certain geographic regions or security boundaries. Federated analytics lets you analyze it in place.

Latency: For real-time decisions (like Hypershield enforcement or network path optimization), you can't wait for data to be centralized, processed, and returned. Edge processing with federated queries is faster.

As industry analyst Torsten Volk from Enterprise Strategy Group notes: "The Cisco Data Fabric provides Splunk with access to machine data from all kinds of network equipment that its competitors cannot access, as a lot of that data never leaves its device for cost or compliance reasons."

This is the moat. Cisco's networking infrastructure generates telemetry that other observability vendors can't easily reach. By processing signals at the edge and combining them with traditional operations data, they unlock insights competitors simply can't replicate.

The architecture in practice:

- Network telemetry stays on Catalyst switches, but Splunk can query it via APIs

- ThousandEyes path data remains in regional data centers for compliance, but federated search correlates it with app performance

- Hypershield enforcement telemetry is processed locally for real-time decisions, but aggregated patterns feed back to Splunk for trend analysis

It's not "centralize everything." It's "analyze everything, wherever it lives."
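The difference between centralized and federated analysis can be sketched in a few lines of Python. This is a toy model under stated assumptions: the site names, the sample rows, and the `local_summary` and `federated_mean` helpers are all hypothetical, and nothing here reflects how the Cisco Data Fabric or Splunk federated search is actually implemented.

```python
from concurrent.futures import ThreadPoolExecutor

# Each "site" keeps its own raw telemetry. In a real deployment these would be
# remote systems; the names and rows here are made-up sample data.
SITES = {
    "catalyst-eu": [{"metric": "latency_ms", "value": 12.0},
                    {"metric": "latency_ms", "value": 48.0}],
    "catalyst-us": [{"metric": "latency_ms", "value": 20.0}],
    "edge-apac": [{"metric": "latency_ms", "value": 30.0},
                  {"metric": "latency_ms", "value": 40.0}],
}


def local_summary(site: str, metric: str) -> dict:
    """Runs where the data lives and returns only a small aggregate."""
    values = [r["value"] for r in SITES[site] if r["metric"] == metric]
    return {
        "site": site,
        "count": len(values),
        "total": sum(values),
        "max": max(values, default=0.0),
    }


def federated_mean(metric: str):
    """Fans the query out to every site, then combines the partial results."""
    with ThreadPoolExecutor() as pool:
        parts = list(pool.map(lambda site: local_summary(site, metric), SITES))
    count = sum(p["count"] for p in parts)
    total = sum(p["total"] for p in parts)
    mean = total / count if count else 0.0
    return mean, parts


mean, parts = federated_mean("latency_ms")
print(f"global mean latency: {mean} ms from {len(parts)} sites")
```

Only the per-site counts, totals, and maxima cross the boundary; the raw rows never leave their site. That is the property behind the cost, compliance, and latency arguments above.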

This is sophisticated infrastructure thinking, and it only works because Splunk has the platform capabilities to support it.

Integration Progress: What's Shipping vs. What's Roadmap

Let's get concrete about what's actually integrated today versus what's still promised.

Shipped (2024-2025):

30 Splunk Technical Add-Ons Updated: Cisco immediately prioritized updating Splunk's connectors to "gold standard" quality, ensuring data from Cisco products integrates cleanly. (Cisco EVP shares progress on Splunk integration)

XDR Integration with Splunk SIEM: High-fidelity alerts and detections from Cisco XDR now feed directly into Splunk Enterprise Security. Security analysts can investigate XDR alerts within their existing Splunk workflows.

Log Observer Connect for AppDynamics: Application logs and metrics from AppDynamics flow into Splunk Observability Cloud, giving developers and operations teams correlated visibility.

Cisco Talos Threat Intelligence Integration: Splunk users can now access Cisco Talos threat data natively, improving threat detection without switching tools.

In Progress (2025-2026):

ThousandEyes Bidirectional Integration with Splunk ITSI: Network path data from ThousandEyes correlates with Splunk IT Service Intelligence for service health monitoring. If a service degrades, ITSI can immediately show network path issues from ThousandEyes as potential causes. (Splunk + Cisco ThousandEyes: New Integration for End-to-End Digital Resilience)

Deeper XDR Capabilities: Enhanced correlation between network detection (NDR), endpoint detection (EDR), and SIEM for comprehensive threat hunting.

Unified AI Assistants: Cisco's vision is a single AI assistant across security and observability that understands context from both domains. This requires the data integration to mature first.

Future Roadmap:

Full Data Fabric Implementation: Complete federated analytics across all Cisco telemetry sources, with edge processing and centralized orchestration.

AgenticOps at Scale: AI agents that can autonomously troubleshoot and remediate issues across network, security, and application domains using unified Splunk telemetry.

The honest assessment: The foundational integrations are shipping. The transformative vision is still being built. Cisco's moving methodically, which is smart—rushing integration often breaks things. But it also means the "fully unified platform" story is 2-3 years from complete realization.

Why This Couldn't Be Built In-House

Here's the uncomfortable question: Why spend $28 billion instead of building this capability internally?

Cisco tried. For years, they worked on unifying their telemetry and creating common data models. It never fully worked because:

1. Platform Maturity Takes Time

Splunk has spent 20 years building a data platform that scales to petabyte-level ingestion with sub-second query performance. That's not something you replicate quickly. The indexing algorithms, distributed architecture, and query optimization represent deep engineering investment that accumulates over decades.

2. Ecosystem Lock-In

Splunk has 15,000+ apps and integrations in its ecosystem. Security teams already use Splunk Enterprise Security. Operations teams use Splunk Observability Cloud. Asking them to migrate to a Cisco-built alternative is a multi-year, high-risk change management project.

Buying Splunk means inheriting that installed base and ecosystem immediately.

3. Talent and Culture

Splunk employs some of the world's best experts in large-scale data processing, observability, and security analytics. Hiring comparable talent and building institutional knowledge would take years—time Cisco doesn't have as the AI transformation accelerates.

4. Market Urgency

Competitors are moving fast. Datadog is expanding into security. Palo Alto is building observability into their security platform. The convergence of observability and security is happening whether Cisco participates or not.

The $28B bet is essentially: "We can't afford to be late to this architectural shift, and building it ourselves would take too long."

The Customer Perspective: What Actually Changes

Let's talk about what this means for someone actually using Cisco and Splunk products.

If you're a Splunk customer:

You now have native access to Cisco's domain expertise—Talos threat intelligence, ThousandEyes network insights, Hypershield telemetry—without leaving Splunk. Your existing Splunk skills and workflows stay relevant; you're just getting better data sources.

If you're a Cisco customer:

You gain a unified data platform for correlation. Instead of three tools with three dashboards, you can investigate issues in Splunk with all the context from network, app, and security telemetry in one place.

If you're both:

This is the target state—Cisco gear generating rich telemetry, Splunk providing the analytics and intelligence layer, and AI agents (AgenticOps) automating response based on correlated insights.

The practical example:

A user reports slow application performance. Instead of manually checking:

- ThousandEyes (network path)

- AppDynamics (app performance)

- Catalyst analytics (network fabric)

- XDR (security events)

An AI agent trained on unified Splunk data automatically correlates:

- Application response time spike at 10:23 AM

- Network path latency increase on specific route at 10:22 AM

- Configuration change on Catalyst switch at 10:21 AM

- No security events (rules out attack)

Root cause identified: The config change created a suboptimal routing path. The agent suggests a remediation (revert config or adjust routing policy), and with approval, executes it. Problem resolved in minutes instead of hours.
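The correlation step in that scenario is simple once the events share a timeline. Here is a minimal Python sketch of it, assuming a fixed five-minute lookback window. The date, the extra "interface flap" event, and the `candidate_causes` helper are hypothetical additions for illustration; a real agent would need far more than timestamp ordering to claim a root cause.

```python
from datetime import datetime, timedelta

# The three events from the scenario, plus one unrelated earlier event.
# The calendar date is arbitrary; only the clock times come from the example.
events = [
    {"time": datetime(2025, 1, 15, 10, 23), "source": "AppDynamics",
     "what": "application response time spike"},
    {"time": datetime(2025, 1, 15, 10, 22), "source": "ThousandEyes",
     "what": "path latency increase on specific route"},
    {"time": datetime(2025, 1, 15, 10, 21), "source": "Catalyst",
     "what": "configuration change"},
    {"time": datetime(2025, 1, 15, 9, 5), "source": "Catalyst",
     "what": "routine interface flap"},
]


def candidate_causes(symptom, all_events, window=timedelta(minutes=5)):
    """Return events that precede the symptom within the window, earliest first."""
    start = symptom["time"] - window
    earlier = [e for e in all_events if start <= e["time"] < symptom["time"]]
    return sorted(earlier, key=lambda e: e["time"])


symptom = events[0]
chain = candidate_causes(symptom, events)

for e in chain:
    print(e["time"].strftime("%H:%M"), e["source"], "-", e["what"])
```

Ordering by time only produces candidates, not proof. The earliest event in the window (the config change) is where a human or an agent would start, and the 9:05 event is filtered out because it falls outside the lookback.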

That's the vision. Whether it actually works that smoothly in practice is what we'll learn over the next 12-18 months.

The Financial Reality: A $28B Risk

Let's acknowledge the elephant: This is a massive financial bet.

Cisco's total annual revenue is around $55-60 billion. They spent roughly half a year's revenue on one acquisition. That's not casual M&A. That's strategic desperation.

The bull case:

Splunk revenue was ~$4 billion annually pre-acquisition. If Cisco can:

- Accelerate Splunk growth through cross-sell (Cisco's massive customer base)

- Extract cost synergies (eliminate duplicate functions, consolidate infrastructure)

- Drive higher-value deals (sell integrated observability + networking + security platforms)

Then the acquisition pays for itself in 7-10 years and repositions Cisco for secular growth in observability and AI operations.
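A quick back-of-the-envelope check on those numbers, as a Python sketch. The inputs are the approximate figures quoted in this article. Treating "pays for itself" as a simple undiscounted payback (price divided by years, ignoring cost of capital and taxes) is my simplifying assumption, not Cisco's model.

```python
PRICE_B = 28.0                  # acquisition price, $B
CISCO_REVENUE_B = (55.0, 60.0)  # approximate annual revenue range, $B
SPLUNK_REVENUE_B = 4.0          # approximate pre-acquisition revenue, $B
PAYBACK_YEARS = (7, 10)         # payback horizon from the bull case

# Price as a share of one year of Cisco revenue (low and high revenue estimates).
price_to_cisco_revenue = [PRICE_B / r for r in CISCO_REVENUE_B]

# Price as a multiple of Splunk's annual revenue.
price_to_splunk_revenue = PRICE_B / SPLUNK_REVENUE_B

# Net benefit per year needed for a simple (undiscounted) payback.
required_annual_return_b = [PRICE_B / y for y in PAYBACK_YEARS]

print([round(x, 2) for x in price_to_cisco_revenue])
print(price_to_splunk_revenue)
print(required_annual_return_b)
```

Under those assumptions, the deal has to generate net benefit of the same order as Splunk's entire pre-acquisition revenue every year, which is why cross-sell and synergies carry so much of the bull case.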

The bear case:

Integration is hard. Cisco's acquisition history is mixed (Meraki succeeded, AppDynamics struggled, Sourcefire got absorbed). If:

- Splunk revenue growth stalls (existing customers churn, new customer acquisition slows)

- Integration takes longer than expected (promised synergies don't materialize)

- Competitors (Datadog, Dynatrace, Elastic) outmaneuver Cisco in market positioning

Then this becomes a $28B albatross that weighs down Cisco's balance sheet and limits flexibility in a changing market.

My read:

The strategic logic is sound. Cisco genuinely needed a unified data platform, and building it in-house wasn't feasible. But execution risk is enormous. Watch for:

- Revenue retention: Is Splunk holding onto existing customers or seeing churn post-acquisition?

- Cross-sell traction: Are Cisco sales teams actually selling Splunk to network customers, or are the portfolios still siloed?

- Product integration depth: Are we seeing genuine platform capabilities or just API-level connections?

The financial success of this acquisition won't be clear until 2026-2027. But the strategic direction, unified data as the foundation for AI-driven operations, is correct regardless of whether the specific bet on Splunk pays off.

Amsterdam Preview: Proof Points to Watch For

At Cisco Live Amsterdam in February 2026, here's what tells us if this is working:

Joint Customer Success Stories:

Not "we use Cisco networking and Splunk separately" but "we deployed integrated observability and cut MTTR by 60% because we could correlate network, app, and security events in real-time." Quantified outcomes with named customers.

Platform Demonstrations:

Live demos showing an issue detected in one domain (network degradation in ThousandEyes) automatically triggering investigation across other domains (app performance in AppDynamics, security posture in XDR) with Splunk as the correlation engine. Not slides—actual working systems.

Data Fabric Architecture Sessions:

Technical deep-dives on how federated analytics actually work. How does edge processing on Catalyst switches feed into Splunk queries? What's the latency? What are the data governance controls?

AgenticOps Use Cases:

Show AI agents using Splunk data to autonomously troubleshoot and remediate issues. What's the accuracy rate? What's the false positive rate? When do humans need to intervene?
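For anyone scoring those demos, here is how the two rates would be computed from a tally of agent decisions. This is a small Python sketch; the `AgentOutcomes` class and the counts are hypothetical, made up purely to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class AgentOutcomes:
    true_positives: int   # agent flagged a real issue
    false_positives: int  # agent flagged something that was not an issue
    true_negatives: int   # agent correctly stayed quiet
    false_negatives: int  # agent missed a real issue

    def accuracy(self) -> float:
        total = (self.true_positives + self.false_positives
                 + self.true_negatives + self.false_negatives)
        return (self.true_positives + self.true_negatives) / total

    def false_positive_rate(self) -> float:
        # Share of healthy periods where the agent raised a false alarm.
        return self.false_positives / (self.false_positives + self.true_negatives)

    def precision(self) -> float:
        # Share of the agent's alarms that were real issues.
        return self.true_positives / (self.true_positives + self.false_positives)


# Made-up counts: 1,000 evaluation windows, most of them uneventful.
outcomes = AgentOutcomes(true_positives=45, false_positives=5,
                         true_negatives=940, false_negatives=10)

print(outcomes.accuracy(), outcomes.false_positive_rate(), outcomes.precision())
```

One thing to keep in mind: when most windows are uneventful, accuracy looks high almost by default. Missed issues and the share of alarms that were real tell you more about whether you would trust the agent to act on its own.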

Revenue Synergy Signals:

Cisco executives discussing specific cross-sell wins. "We closed 200 deals in 2025 where Splunk was the wedge into security/observability expansion" or "Cisco networking customers are adopting Splunk Observability at 2x the rate of the general market."

The technology integrations are happening. Whether they translate into business outcomes and customer value—that's the question Amsterdam should answer.

How This Anchors Everything Else

Let's connect this back to the broader transformation story we've been building:

Silicon One generates network telemetry → feeds into Splunk for correlation

Hypershield enforcement events → logged in Splunk for threat analysis

ThousandEyes path data → correlated in Splunk with app performance

AppDynamics traces → unified in Splunk with infrastructure metrics

XDR security alerts → enriched by Splunk correlation across domains

AgenticOps AI agents → trained on Splunk data corpus for context-aware automation

Splunk isn't a product. It's the connective tissue.

Without it, Cisco's portfolio remains best-of-breed point solutions that don't fundamentally work together. With it, they have a platform story where unified data enables AI-driven operations.

The $28 billion bet is essentially: "The future of enterprise IT is AI agents managing infrastructure autonomously. Those agents need unified, correlated data to be effective. Splunk provides that data substrate. Everything else we're building depends on this foundation being solid."

High stakes. Huge execution risk. But strategically coherent.

The Bottom Line

Here's what Cisco's actually saying with the Splunk acquisition: We can't compete in the AI era with siloed tools that don't share data. Unified telemetry isn't a nice-to-have—it's the prerequisite for intelligent operations.

The technology bet—Splunk as the universal data platform for networking, security, and observability—is credible. The architecture (federated analytics, OpenTelemetry support, massive ecosystem) is sound.

The financial bet—$28 billion can be recouped through cross-sell, cost synergies, and platform value creation—is TBD. Integration execution will determine whether this is brilliant or a cautionary tale.

But the strategic direction is clear and, I think, correct: The moat in enterprise IT is shifting from "best individual products" to "best integrated platform with unified data and AI-driven intelligence."

Cisco either builds that platform or becomes irrelevant. They chose to buy the foundation (Splunk) rather than build it. Whether that foundation is strong enough to support everything they're constructing on top of it (AgenticOps, AI Canvas, unified observability, security convergence) is something Amsterdam should start to answer.

And if it works? This isn't just an acquisition. It's the data operating system that Cisco should have built 10 years ago, finally in place just in time for the AI transformation.

Your Take?

Are you using Splunk in your environment? If Cisco can actually deliver unified correlation across network, security, and application data, does that change your monitoring/troubleshooting workflows in meaningful ways?

And for those already using both Cisco infrastructure and Splunk: Are you seeing the integration benefits yet, or is it still mostly separate tools with loose API connections?

I'm especially curious to hear from anyone who's had to manually correlate data across ThousandEyes, AppDynamics, and security tools: does the Cisco Data Fabric approach solve a real problem you're facing?

Next in the series: AgenticOps and the Deep Network Model—what happens when you train a domain-specific LLM on all that unified Splunk data, and whether AI agents can actually manage infrastructure autonomously or if we're just automating our way into new categories of failure.

About this series: I'm building toward Cisco Live Amsterdam in February 2026 by making sense of Cisco's biggest strategic moves. This is part learning exercise, part knowledge sharing. I'll be hosting the Cisco Live broadcast again this year, and I want to show up with a clear understanding of the storylines Cisco's building. If something here resonates, or if you think I'm missing the mark, let's talk about it.

Key References:

- Computer Weekly - Splunk Integration Moving Apace

- Cisco Investor Relations - Full-Stack Observability Announcement

- Splunk Blog - New Day for Data: Cisco and Splunk

- Forrester Analysis - Cisco Live 2024 Insights

- TechTarget - Cisco Data Fabric Strategy

- TechTarget - Torsten Volk Analysis

- SDxCentral (May 10, 2024) - Integration Progress Update

- Cisco Blog (June 10, 2025) - ThousandEyes Integration