AgenticOps & AI Canvas: When AI Actually Understands Your Network

Generic AI knows networking theory. Domain-specific AI knows your network. Cisco's AgenticOps is built on unified telemetry—and that changes everything about how infrastructure gets managed.

Part of "Building Toward Amsterdam" - A public learning exercise ahead of Cisco Live EMEA 2026

Read the series: Why I'm Making the Bull Case • Silicon One: Cisco's Ethernet Bet • Hypershield: Security in the Data Path • Splunk: The $28B Data Operating System

Generic AI can describe what a network is. A domain-specific AI—trained on your own telemetry—can tell you why your network is slow right now and how to fix it. That’s not a subtle distinction; it’s the difference between reading a manual and talking to the engineer who built your system.

The real question is bigger: Can AI agents actually manage network and security infrastructure on their own? Not just answer questions or generate configs, but detect issues, correlate causes across domains, and execute safe remediations.

Cisco believes they can—and it’s pursuing a specific path to get there: AI built from unified telemetry, not a generic LLM pretending to understand networking. That’s where the Deep Network Model and AgenticOps come in.

In this post, I’ll break down what Cisco is actually building with AgenticOps and AI Canvas, why the Splunk integration makes it credible instead of vaporware, and what would prove it’s real versus just clever AI-washing.

The Problem: Generic AI Doesn't Know Your Network

Most “AI for IT” solutions disappoint for a simple reason: they know about networking, not your network.

Large language models are trained on internet-scale data. They can recite BGP theory, describe OSPF routing, or generate Python scripts for automation. They’re encyclopedic—useful for study sessions, not for live operations.

Ask a generic model, “Why is application X slow right now?”

You’ll get textbook speculation:

“Could be congestion, an app bottleneck, or database latency. Try these troubleshooting steps…”

Helpful background. Zero context.

Now imagine an AI that already knows:

- Your actual topology and how traffic flows between sites

- Real-time utilization on every link, switch, and router

- Application dependencies and the network paths they traverse

- The timestamp of each configuration change

- Historical performance baselines for comparison

- Security policies that could influence routing

- Ongoing incidents and their relationships

Ask that AI the same question, and it replies:

“Application X latency rose to 340 ms at 10:23 AM. Root cause: a config change on router Y at 10:21 AM forced traffic onto a congested link. SD-WAN policy doesn’t prioritize interactive traffic on that path.

Remediation: revert the change or update QoS to deprioritize batch workloads during business hours.”

One answer quotes the manual. The other understands your environment.

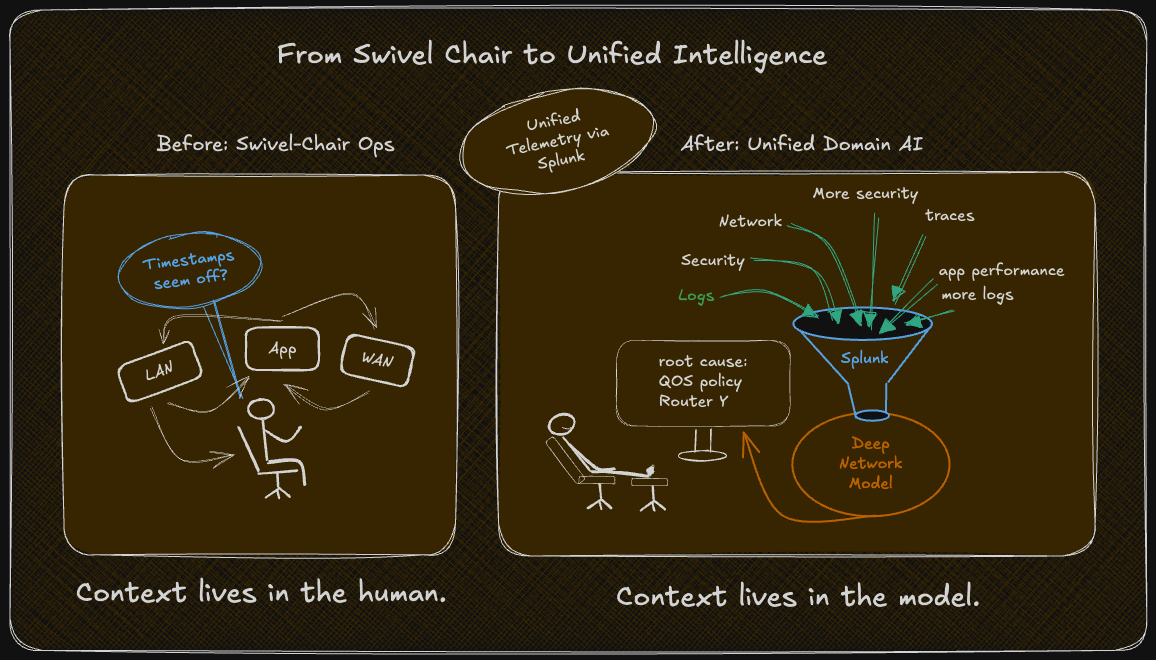

That’s the gap Cisco is trying to close. The goal is to end the swivel-chair era, where humans act as the integration layer between tools.

The Deep Network Model: Domain AI That Earns Its Name

At Cisco Live 2025, Cisco introduced what they're calling the Deep Network Model—their domain-specific large language model trained specifically on network and security telemetry.

This isn’t just a marketing term. The architecture genuinely differs from a generic LLM in three key ways:

1. Training Data That Reflects Reality

Instead of scraping the public internet, the Deep Network Model learns from telemetry produced by Cisco’s own platforms:

- Network devices — Catalyst, Meraki, Nexus, routers

- Security systems — XDR, Hypershield, firewall logs

- Application observability — AppDynamics traces

- Network path visibility — ThousandEyes

- Infrastructure logs — Splunk-ingested data

- Configuration management — changes, rollbacks, approvals

The model doesn’t just ingest this data; it learns the relationships between them. When application latency spikes, which network events correlate? When a security rule changes, how do traffic patterns shift? When a link saturates, which applications are hit first?

These are not theoretical relationships—they’re patterns drawn from real operational behavior.

2. Contextual Awareness

Because it’s trained on unified telemetry, the model understands the shape of your environment:

- Which apps depend on which paths

- What “normal” traffic looks like for your business

- How changes in one domain (like routing) ripple into others (like app performance or security posture)

- Organizational policies and operational boundaries

This context transforms the AI from a smart chatbot into a system that actually knows your infrastructure.

3. Continuous Learning

The intent is that the longer it observes your environment, the smarter it gets:

- Learns seasonal or diurnal traffic patterns

- Recognizes normal post-change recovery behavior

- Distinguishes between recurring noise and true anomalies

- Predicts degradations before they hit users

Over time, it evolves from reactive analyst to predictive operator.

In short, the Deep Network Model aims to turn generic intelligence into operational intuition—AI that not only understands networks, but understands yours.
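To make “learning what normal looks like” concrete, here’s a toy sketch of my own, not Cisco’s method. It assumes latency samples tagged with hour of day, builds a per-hour baseline, and flags readings more than three standard deviations above that hour’s norm. The function names, the sample data, and the 3-sigma threshold are all hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def build_hourly_baseline(samples):
    """samples: iterable of (hour_of_day, latency_ms). Returns {hour: (mean, stdev)}."""
    by_hour = defaultdict(list)
    for hour, latency in samples:
        by_hour[hour].append(latency)
    # stdev() needs at least two points, so hours with thinner history are skipped
    return {h: (mean(v), stdev(v)) for h, v in by_hour.items() if len(v) >= 2}


def is_anomaly(baseline, hour, latency, threshold=3.0):
    """True if `latency` sits more than `threshold` standard deviations above that hour's mean."""
    if hour not in baseline:
        return False  # no history for this hour yet: stay quiet rather than guess
    mu, sigma = baseline[hour]
    if sigma == 0:
        return latency > mu
    return (latency - mu) / sigma > threshold


# Hypothetical history: ~15 ms during business hours, ~41 ms during a 03:00 backup window
history = [(10, 14.0), (10, 15.0), (10, 16.0), (10, 15.5), (3, 40.0), (3, 42.0), (3, 41.0)]
baseline = build_hourly_baseline(history)

print(is_anomaly(baseline, 10, 340.0))  # the CRM spike
print(is_anomaly(baseline, 3, 42.5))    # normal for the backup window
print(is_anomaly(baseline, 10, 42.5))   # same value, wrong time of day
```

The last two calls are the point: the same 42.5 ms reading is routine at 03:00 and a real anomaly at 10:00, which is the difference between “recurring noise” and a signal worth waking someone up for.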

The Key Dependency: Why This Requires Splunk

A domain-specific model is only as strong as the data it sees.

If network telemetry lives in one silo, security events in another, and application metrics in a third—with different formats, timestamps, and correlation keys—training a unified model isn’t just hard; it’s almost impossible.

That’s where Splunk becomes the linchpin, and where the previous post about Splunk connects directly.

Splunk provides the universal data substrate that makes a domain AI feasible:

- Normalized schema across all data sources

- Unified timestamps for precise event correlation

- Consistent indexing for fast queries

- Context preservation—not just raw metrics, but relationships between them
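Here’s a minimal sketch of why a normalized schema and unified timestamps matter, assuming two hypothetical raw formats: a device log line stamped in local time with a UTC offset, and an APM-style record carrying epoch seconds. The `Event` fields and parser names are my own illustration, not Splunk’s actual schema or ingestion pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Event:
    ts: datetime                  # always timezone-aware UTC after normalization
    domain: str                   # "network", "security", or "application"
    source: str                   # device or service that emitted the event
    kind: str                     # e.g. "config_change", "latency"
    value: Optional[float] = None


def from_syslog(line: str) -> Event:
    """Hypothetical device log line: '<ISO-8601 time with offset> <device> <EVENT_TYPE>'."""
    stamp, device, kind = line.split()
    ts = datetime.fromisoformat(stamp).astimezone(timezone.utc)
    return Event(ts, "network", device, kind.lower())


def from_apm(record: dict) -> Event:
    """Hypothetical APM record carrying epoch seconds and a latency reading."""
    ts = datetime.fromtimestamp(record["epoch"], tz=timezone.utc)
    return Event(ts, "application", record["app"], "latency", float(record["latency_ms"]))


# A switch logging in local time (UTC+2) and an APM tool logging epoch seconds
events = sorted(
    [
        from_apm({"epoch": 1749550980, "app": "crm", "latency_ms": 340}),
        from_syslog("2025-06-10T12:21:00+02:00 switch-y CONFIG_CHANGE"),
    ],
    key=lambda e: e.ts,
)
for e in events:
    print(e.ts.isoformat(), e.domain, e.source, e.kind)
```

Once both land on one UTC timeline, the 10:21 switch change correctly sorts ahead of the 10:23 latency reading, even though the raw logs disagreed on format and time zone. Skip that step and “which came first?” becomes guesswork.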

Without Splunk, AgenticOps would be just another chatbot poking APIs on network devices.

With Splunk’s unified data fabric, those same agents gain true cross-domain reasoning—seeing how a routing change affects app latency or how a security rule alters traffic flow.

The Splunk integration isn’t an add-on.

It’s the foundation that gives the Deep Network Model situational awareness and turns AI Canvas from a query interface into an operational control surface.

AI Canvas: Where Intelligence Becomes Operational

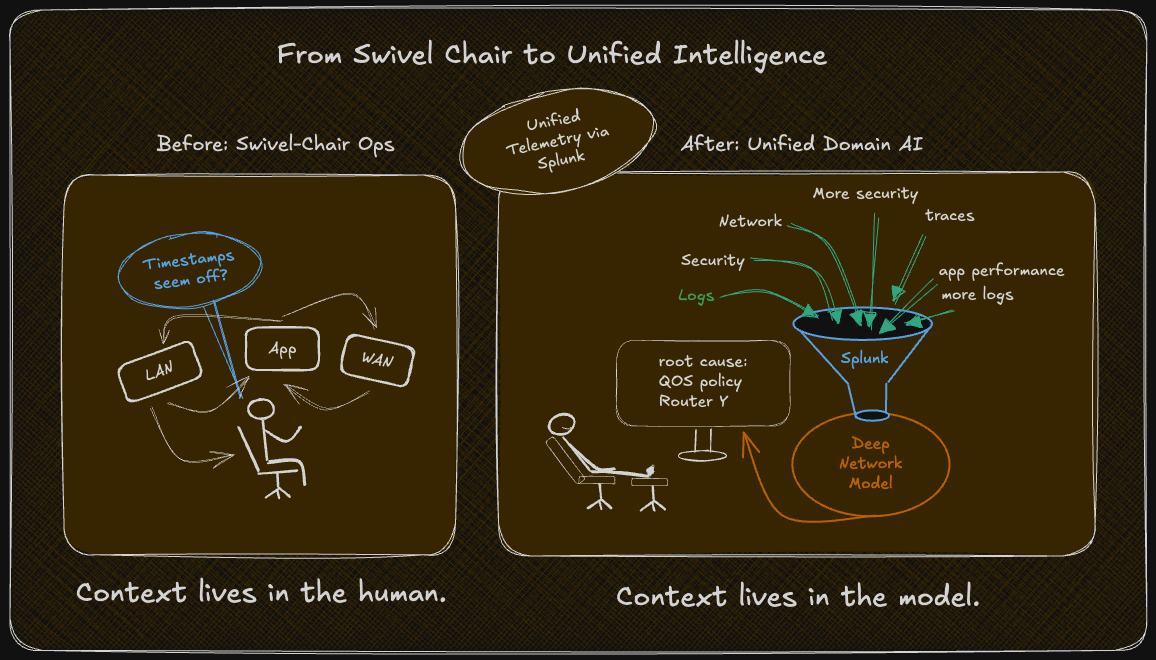

At Cisco Live 2025, Cisco unveiled AI Canvas—the interface where the Deep Network Model and AgenticOps agents actually manifest for network and security operations teams.

Think of it as a visual workspace that turns natural language into infrastructure insight. Instead of jumping between dashboards, you ask questions and watch the system build the context for you.

From Queries to Correlations

You might ask:

- “Show me all applications affected by the outage last Tuesday.”

- “What happens if I take switch X offline for maintenance?”

- “Which security policies are blocking traffic to the new database?”

- “Why did latency spike after yesterday’s firewall change?”

The AI Canvas workflow looks like this:

- Parse the question — understand intent and scope.

- Query relevant telemetry via the Splunk data fabric.

- Reason across domains using the Deep Network Model.

- Generate visualizations—topology maps, dependency graphs, correlation timelines.

You’re not building dashboards or writing queries.

You’re asking operational questions, and the system decides how to answer them—pulling from all your network, application, and security data.
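Here’s a deliberately tiny sketch of that four-stage flow, my own illustration rather than anything Cisco has published. The in-memory `STORE`, the keyword table standing in for a language model’s intent parsing, and every function name are hypothetical. The “reason” stage only orders events in time, which is far less than what the Deep Network Model is supposed to do.

```python
# Hypothetical stand-in for the Splunk data fabric: (minute past 10:00, domain, description)
STORE = [
    (21, "network", "config change on switch-y"),
    (22, "network", "path latency up on link-3"),
    (23, "application", "CRM response time 340 ms"),
    (5, "security", "routine policy sync"),
]

# Keyword table standing in for real intent parsing by a language model
DOMAIN_KEYWORDS = {
    "network": {"latency", "slow", "switch", "link", "offline"},
    "application": {"slow", "application", "app", "crm"},
    "security": {"firewall", "policy", "policies", "blocking", "security"},
}


def parse_question(question):
    """Stage 1: work out which telemetry domains the question touches."""
    words = set(question.lower().replace("?", "").split())
    return {domain for domain, keys in DOMAIN_KEYWORDS.items() if words & keys}


def query_fabric(domains):
    """Stage 2: pull only events from the relevant domains."""
    return [event for event in STORE if event[1] in domains]


def reason(events):
    """Stage 3 (greatly simplified): order events in time so earlier ones read as candidate causes."""
    return sorted(events)


def render_timeline(events):
    """Stage 4: a text timeline standing in for Canvas topology maps and graphs."""
    return "\n".join(f"10:{minute:02d}  [{domain}] {text}" for minute, domain, text in events)


print(render_timeline(reason(query_fabric(parse_question("Why is the CRM application slow?")))))
```

The design point is the separation of stages: the question decides which data gets pulled, so the user never has to know which tool holds which signal.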

The Demo That Made People Pay Attention

In one live demo, an engineer asked:

“What would happen if I take switch X offline for maintenance?”

AI Canvas instantly:

- Identified all applications traversing that switch.

- Calculated redundancy and available failover capacity.

- Flagged three apps that would experience degradation.

- Suggested a lower-traffic maintenance window.

- Generated a rollback-ready maintenance plan.

That’s not a scripted Q&A.

It’s multi-domain reasoning—understanding topology, utilization, dependencies, and consequences, then producing an actionable plan.

If this works consistently in real customer environments, it’s not just smarter monitoring—it’s the first step toward autonomous infrastructure operations.
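The what-if question in that demo is, at its core, a graph problem. Here’s a simplified sketch of my own (not how AI Canvas works internally) that assumes a hypothetical topology, fewest-hops paths instead of real routing, and spare capacity per link in Mbps. It finds the apps crossing the switch, looks for a failover path, and flags apps whose combined rerouted demand would exceed spare capacity.

```python
from collections import deque


def shortest_path(adj, src, dst, avoid):
    """Breadth-first search for a fewest-hops path from src to dst that never enters `avoid`."""
    prev, seen, queue = {}, {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return path[::-1]
        for nxt in adj.get(node, ()):
            if nxt != avoid and nxt not in seen:
                seen.add(nxt)
                prev[nxt] = node
                queue.append(nxt)
    return None


def links(path):
    """Undirected links along a path, as frozensets so (a, b) and (b, a) match."""
    return [frozenset(pair) for pair in zip(path, path[1:])]


def maintenance_impact(adj, spare, apps, switch):
    """Classify each app as 'unaffected', 'rerouted', 'degraded', or 'down' if `switch` goes offline."""
    result, rerouted, extra = {}, {}, {}
    for name, (path, demand) in apps.items():
        if switch not in path:
            result[name] = "unaffected"
            continue
        endpoint = switch in (path[0], path[-1])
        alt = None if endpoint else shortest_path(adj, path[0], path[-1], avoid=switch)
        if alt is None:
            result[name] = "down"
            continue
        rerouted[name] = alt
        # Conservative: count the full demand on every link of the failover path
        for link in links(alt):
            extra[link] = extra.get(link, 0) + demand
    for name, alt in rerouted.items():
        congested = any(extra[link] > spare.get(link, 0) for link in links(alt))
        result[name] = "degraded" if congested else "rerouted"
    return result


# Hypothetical campus: branch is dual-homed via sw-x and sw-z; the lab hangs off sw-x only
ADJ = {
    "branch": ["sw-x", "sw-z"], "lab": ["sw-x"],
    "sw-x": ["branch", "lab", "core"], "sw-z": ["branch", "core"],
    "core": ["sw-x", "sw-z", "dc"], "dc": ["core"],
}
SPARE = {frozenset(("branch", "sw-z")): 300, frozenset(("sw-z", "core")): 300,
         frozenset(("core", "dc")): 1000}
APPS = {  # current path and demand in Mbps
    "crm": (["branch", "sw-x", "core", "dc"], 200),
    "voip": (["branch", "sw-x", "core", "dc"], 50),
    "backup": (["branch", "sw-x", "core", "dc"], 100),
    "lab-tool": (["lab", "sw-x", "core", "dc"], 10),
    "email": (["branch", "sw-z", "core", "dc"], 50),
}
print(maintenance_impact(ADJ, SPARE, APPS, "sw-x"))
```

Each rerouted app fits on the failover path by itself, but crm, voip, and backup together need 350 Mbps against 300 Mbps spare, so all three come back as degraded. Pull the backup job out of the window and the other two reroute cleanly, which is the same logic behind suggesting a lower-traffic maintenance window.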

Cross-Domain Correlation: Seeing the Whole Picture

The real power of AI Canvas appears when problems cross boundaries—when network, application, and security layers all play a part.

A user reports that the CRM application is slow. Traditionally, operations would start a manual war room:

- Check ThousandEyes for network path issues → looks fine.

- Check AppDynamics for app bottlenecks → database queries look slow.

- Check the database directly → no issue found.

- Check security logs → nothing obvious.

Hours vanish stitching data together.

How AI Canvas Handles the Same Scenario

You ask:

“Why is the CRM application slow?”

AI Canvas, powered by the Deep Network Model, queries the unified Splunk fabric and responds:

- Application response times spiked at 10:23 AM.

- Network path latency increased one minute earlier.

- A configuration change on a Catalyst switch (switch Y) occurred at 10:21 AM.

- No correlated security events detected.

- Historical baseline shows typical latency of 15 ms, now 340 ms.

The model reasons through causality:

- Timing correlation: config change → latency increase → app degradation.

- Topology analysis: routing change forced traffic through a congested link.

- Root cause: suboptimal path selection from a misapplied configuration.

Recommendation:

“Revert the configuration on switch Y or adjust QoS to prioritize interactive traffic on that path.”

Symptom → Root cause → Remediation—in seconds, not hours.

And across every domain that matters.

This is the difference between dashboards and diagnosis.

AI Canvas doesn’t just surface metrics—it connects dots humans can’t connect fast enough.
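The timing step in that chain can be sketched in a few lines. This is my own simplification, assuming hypothetical change records with a device name and a UTC timestamp: keep changes in a lookback window before the symptom, rank changes on the affected path first, then by recency. Timing alone only nominates suspects; the topology and traffic evidence described above is what confirms one.

```python
from datetime import datetime, timedelta


def candidate_causes(changes, symptom_start, path_devices, lookback=timedelta(minutes=30)):
    """Rank changes made shortly before a symptom: on-path devices first, then most recent first."""
    in_window = [c for c in changes if symptom_start - lookback <= c["ts"] <= symptom_start]
    # False sorts before True, so changes on the affected path come first
    return sorted(in_window, key=lambda c: (c["device"] not in path_devices, symptom_start - c["ts"]))


# Hypothetical change log (UTC) for the CRM scenario
changes = [
    {"ts": datetime(2025, 6, 10, 9, 10), "device": "router-a", "what": "ACL edit"},
    {"ts": datetime(2025, 6, 10, 10, 22), "device": "printer-7", "what": "firmware update"},
    {"ts": datetime(2025, 6, 10, 10, 21), "device": "switch-y", "what": "routing config change"},
]
suspects = candidate_causes(changes, datetime(2025, 6, 10, 10, 23), path_devices={"switch-y", "core-1"})
for c in suspects:
    print(c["ts"].strftime("%H:%M"), c["device"], c["what"])
```

Note that the printer update is the most recent change, but it ranks second because it isn’t on the CRM path. Pure recency would have pointed at the wrong device.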

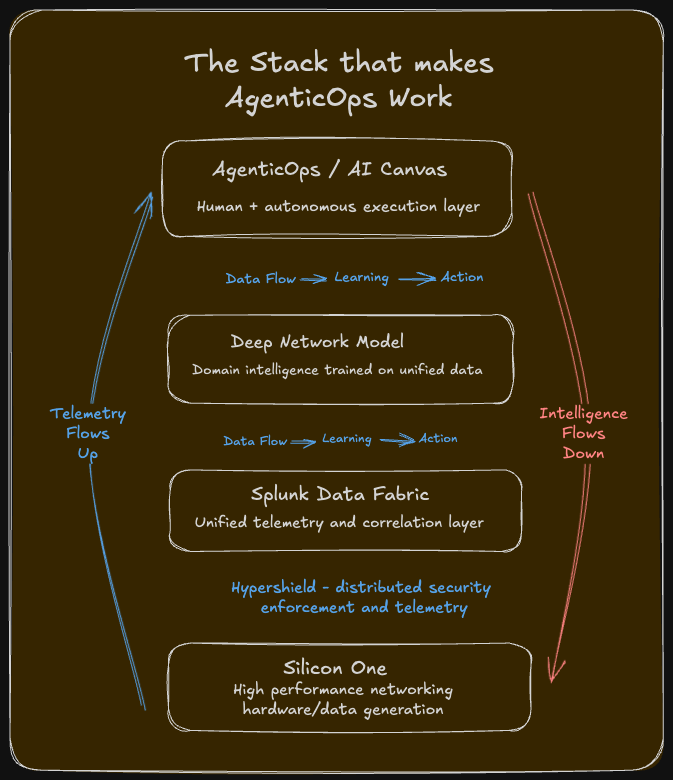

AgenticOps: From Chatbot to Autonomous Agent

Here’s where things get interesting—and where the real risk lives.

AI Canvas is the surface where humans interact with intelligence. AgenticOps is the layer beneath it: a framework of AI agents designed to act, not just analyze.

The Agent Hierarchy

Cisco’s building a tiered agent ecosystem:

- Monitoring Agents watch continuously, detecting anomalies and correlating events across network, security, and application domains.

- Advisory Agents recommend remediations or optimizations based on live telemetry and contextual risk.

- Execution Agents actually make changes—tuning QoS policies, rerouting traffic, updating security rules.

In other words, these aren’t chatbots. They’re AI-driven operators capable of taking real actions, under human-defined trust boundaries.

Graduated Autonomy: A Smart Design Choice

Cisco’s rollout strategy acknowledges what every operations team already knows: trust has to be earned.

So rather than jumping from “AI observes” to “AI controls” overnight, AgenticOps introduces five levels of autonomy:

| Level | Mode / Behavior |
|---|---|
| 1. Observe | Detects issues, alerts humans, and provides analysis only; all actions remain manual. |
| 2. Recommend | Proposes remediations; humans review and execute. |
| 3. Assisted Execution | Executes with real-time human supervision and the ability to abort. |
| 4. Autonomous Execution | Executes automatically for low-risk cases; humans review afterward. |
| 5. Full Autonomy | Handles detection, diagnosis, remediation, and validation end-to-end. |

Most organizations will operate comfortably in Levels 2–3 for years. Levels 4–5 remain aspirational until the system proves both reliable and explainable.

It’s a pragmatic approach. Cisco isn’t promising an instant leap to “self-driving infrastructure.” It’s building the trust ladder first.
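One way to picture the ladder is as a gate every proposed action passes through. This is a hypothetical sketch, not Cisco’s API: the level names mirror the table, the “low”/“high” risk label is an assumed input, and falling back to a human-executed proposal for higher-risk actions at Level 4 is my assumption, since the table only describes the low-risk case.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    OBSERVE = 1
    RECOMMEND = 2
    ASSISTED = 3
    AUTONOMOUS = 4
    FULL = 5


def handle(level: Autonomy, risk: str) -> str:
    """Decide what happens to a proposed remediation at a given autonomy level."""
    if level == Autonomy.OBSERVE:
        return "alert and analyze only"
    if level == Autonomy.RECOMMEND:
        return "propose; human executes"
    if level == Autonomy.ASSISTED:
        return "execute under live human supervision (abortable)"
    if level == Autonomy.AUTONOMOUS:
        # Assumption: only low-risk actions run unattended at Level 4
        return "execute; human reviews afterward" if risk == "low" else "propose; human executes"
    return "detect, diagnose, remediate, and validate end-to-end"


for level in Autonomy:
    print(level.value, level.name, "->", handle(level, risk="low"))
```

Framed this way, moving a team up a level is a policy setting backed by a track record, not a rewrite of the agents themselves.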


What an AI-Driven Workflow Actually Looks Like

Let’s run through a full incident cycle using AgenticOps:

1. Detection: A monitoring agent flags degraded performance: customer portal latency has climbed from 200 ms to 2,400 ms in 15 minutes.

2. Correlation: The advisory agent queries the Splunk data fabric and discovers a QoS policy change made 17 minutes ago on branch routers.

3. Root Cause Analysis: The Deep Network Model reasons across telemetry: the new policy deprioritized interactive traffic, queueing customer sessions behind backup jobs.

4. Remediation Plan: The advisory agent generates two safe options:

   - Revert the QoS policy on branch routers
   - Update the policy to exempt portal traffic from deprioritization

   Both are low-risk and fully reversible.

5. Execution: At Level 2, the engineer reviews the plan and executes it manually. At Level 4, the AI executes automatically, logging every step for audit.

6. Validation: Within two minutes, latency returns to baseline. The incident closes, and the post-incident summary is stored for continuous learning.

The intelligence lives in steps 2–4—correlating disparate data, reasoning about causality, and proposing context-aware remediations.

That’s where the Deep Network Model earns its keep. Most automation can execute a playbook; very little can reason through a new situation and adapt safely.

Why Domain Expertise Still Matters

Here’s the simplest way to show the difference between generic and domain-specific AI.

Generic LLM:

“Application slowness can result from many causes—network latency, CPU load, database queries. Try these 12 troubleshooting steps…”

Domain AI (Deep Network Model):

“Application X response time increased from 180 ms to 2.1 s at 14:37 UTC.

Network path latency normal.

Database query time elevated—CPU spike at 14:35 UTC from scheduled REINDEX job.

Root cause: maintenance job scheduled during business hours per change #CH-8844.

Recommendation: pause the job now, reschedule after 18:00 UTC. Expected recovery: 30 seconds.”

One is knowledgeable. The other understands your environment.

That difference is the whole point of AgenticOps.

What’s Actually Shipping vs. What’s Still Roadmap

It’s easy to get swept up in Cisco’s AgenticOps vision, so let’s pause and separate what’s real now from what’s still roadmap marketing.

Because for all the talk about “AI-driven operations,” credibility hinges on one thing: what’s actually running in production.

Shipping Now (as of Q3 2025)

- AI Assistants Across Cisco Products

Natural-language query interfaces are now embedded in Security Cloud Control, Networking Cloud, and Splunk.

You can ask plain-language questions (“show me all high-latency links” or “list top policy violations”) and get contextual results.

These assistants are still limited to single-domain reasoning, but they prove the interface pattern works.

- Splunk AI Assistant

Integrated directly into Observability Cloud and Enterprise Security, it helps operators craft and interpret complex queries, highlight anomalies, and explain correlations.

It’s not “AgenticOps,” but it’s the foundational layer that teaches users, and the system, how to reason together.

- Monitoring Agents (Read-Only Mode)

AgenticOps’ first generation of agents is already deployed in select customer environments.

They’re observing infrastructure and generating insights from unified telemetry, but not yet executing changes.

This stage builds the data corpus and correlation accuracy that later autonomy levels will depend on.

In Limited Preview (Select Customers, Q4 2025)

- AI Canvas with Cross-Domain Visualization

The full interactive workspace is now in controlled trials. It lets users query infrastructure across domains (network, security, app performance) and watch the AI generate dependency maps and correlation graphs in real time.

It’s the first genuine step toward “ask the network” operations.

- Advisory Agents (Level 2 Autonomy)

These agents generate remediation plans based on live analysis.

Humans still execute manually, but the recommendations are no longer boilerplate; they’re contextual and explainable.

This is the proving ground for trust: if the AI’s advice consistently solves problems, autonomy can safely expand.

Still Roadmap (2026 and Beyond)

- Execution Agents (Level 3–4 Autonomy)

These will be the first agents allowed to make low-risk, reversible changes: adjusting QoS, rerouting traffic, enforcing updated security rules.

They’re being tested under strict supervision with rollback safeguards. Broad release depends on demonstrated accuracy and predictable behavior.

- Full AgenticOps Lifecycle (Level 5 Autonomy)

The complete end-to-end vision, with AI detecting, diagnosing, remediating, and validating incidents without human touch, is still aspirational.

Cisco isn’t pretending it’s here today, but the foundation is being laid for when confidence and validation metrics reach critical mass.

- Deep Network Model Continuous Learning

The long-term goal is a model that adapts to each customer’s environment, learning baseline behaviors, seasonal patterns, and organizational priorities.

Today’s version learns globally from Cisco data; tomorrow’s will personalize locally.

The honest read: Cisco is moving carefully—and that’s a good thing.

You can’t fake operational reliability in live networks.

The groundwork is solid: data unification through Splunk, explainability through AI Canvas, and human-in-the-loop guardrails through graduated autonomy.

The autonomous future isn’t ready for prime time yet, but Cisco’s building toward it methodically instead of chasing hype.

And that restraint might be the most credible signal of all.

The Trust Problem: How Do You Validate AI Decisions?

The real challenge isn’t the tech — it’s trust.

Diagrams don’t build it; results do.

Every operations team eventually asks the same question:

“How do I know the AI is making good decisions?”

Traditional automation is deterministic: you define rules, you test them, and you know exactly what happens when they run.

AI-driven systems—especially ones powered by large models—don’t always show their work in ways humans can easily interpret. They infer causes, correlate symptoms, and recommend actions, but the why behind those conclusions can be opaque.

Cisco seems to understand that trust isn’t a product feature; it’s a process. Their design for AgenticOps builds transparency, validation, and human oversight directly into how autonomy evolves.

1. Explainability

Every recommendation comes with context.

Each agent output includes the data sources consulted, the correlations identified, the alternatives considered, and the confidence level behind the conclusion.

It’s not just what the system decided—it’s why.

That visibility makes the AI auditable instead of mysterious, which is essential for operators who need to verify cause and effect before letting an agent touch production infrastructure.
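Here’s roughly what that could look like as a data structure. It’s a hypothetical shape of my own, not Cisco’s output format: the field names are illustrative, and the rule that nothing should act on a recommendation citing no sources or evidence is an assumed policy.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    action: str
    confidence: float                                   # 0.0 to 1.0
    data_sources: list = field(default_factory=list)    # what was consulted
    correlations: list = field(default_factory=list)    # evidence found
    alternatives: list = field(default_factory=list)    # options considered and set aside

    def explain(self) -> str:
        lines = [f"Action: {self.action} (confidence {self.confidence:.0%})"]
        lines += [f"  source: {s}" for s in self.data_sources]
        lines += [f"  evidence: {c}" for c in self.correlations]
        lines += [f"  considered: {a}" for a in self.alternatives]
        return "\n".join(lines)

    def is_auditable(self) -> bool:
        """Assumed policy: nothing without cited data and evidence goes near production."""
        return bool(self.data_sources and self.correlations)


rec = Recommendation(
    action="Revert QoS policy on branch routers",
    confidence=0.82,
    data_sources=["branch router config history", "portal latency metrics"],
    correlations=["QoS change 17 min before latency rose from 200 ms to 2,400 ms"],
    alternatives=["Exempt portal traffic from deprioritization"],
)
print(rec.explain())
```

A confident recommendation with empty `data_sources` fails `is_auditable()`, which is the whole idea: high confidence is not a substitute for shown work.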

2. Validation Before Execution

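At the lower autonomy levels, humans stay firmly in control.

At Levels 1 and 2, agents propose remediations, but operators review and execute them manually; at Level 3, execution happens only under real-time human supervision, with the ability to abort.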

Each outcome, whether accepted, rejected, or adjusted, feeds back into the learning loop. Over time, this becomes a record of performance: how often recommendations were correct, what worked, what didn’t, and where the AI misread context.

That validation history is how confidence is built—not through promises, but through proof.

3. Rollback and Safety Mechanisms

When the system does act, it acts cautiously.

Execution Agents are designed with built-in rollbacks: if a change degrades performance, they revert automatically.

Cisco has also discussed blast-radius limits—guardrails that prevent any AI-initiated change from affecting more than a small portion of the environment without human sign-off.

In other words, autonomy comes with airbags.
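Both guardrails are simple to express in code, even if production versions are much harder. This sketch is my own, under stated assumptions: `apply`, `measure`, and `rollback` are hypothetical caller-supplied hooks, and the 5% blast-radius cap and 20% latency tolerance are made-up defaults, not published Cisco values.

```python
def apply_with_guardrails(change, devices, fleet_size, apply, measure, rollback,
                          baseline_ms, max_fraction=0.05, tolerance=1.2):
    """Apply a change within a blast-radius cap; undo it if latency regresses past tolerance."""
    if len(devices) / fleet_size > max_fraction:
        return "needs human sign-off"           # too much of the environment at once
    for device in devices:
        apply(device, change)
    if measure() > baseline_ms * tolerance:
        for device in reversed(devices):        # undo in reverse order of application
            rollback(device, change)
        return "rolled back"
    return "applied"


# Toy hooks that just record what would have happened
log = []
result = apply_with_guardrails(
    "qos-v2", ["r1", "r2"], fleet_size=100,
    apply=lambda d, c: log.append(("apply", d)),
    measure=lambda: 500.0,                      # latency got worse after the change
    rollback=lambda d, c: log.append(("rollback", d)),
    baseline_ms=200.0,
)
print(result, log)
```

Checking the blast radius before touching anything, and validating against a baseline after, mirrors the order of operations a careful human engineer would follow.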

4. Continuous Agent Performance Monitoring

Cisco’s approach treats agent performance like network performance—something measurable.

They’re developing dashboards that track:

- Percentage of accurate anomaly detections

- Frequency of false positives or missed issues

- Accuracy of root cause identification

- Success rate of executed or recommended remediations

These metrics turn “trust” into a KPI.

Teams can decide how much autonomy to grant based on data, not gut feeling.
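As a rough illustration of turning those dashboards into a decision, here’s a sketch with hypothetical inputs and made-up thresholds. Mapping each KPI to a formula (precision for “accurate anomaly detections,” and so on) is my interpretation, not Cisco’s definitions.

```python
def agent_kpis(detections, rca_correct, remediation_ok):
    """
    detections: (flagged, real_issue) pairs from post-incident review
    rca_correct: True where the agent's root cause matched the review
    remediation_ok: True where the remediation resolved the incident
    """
    tp = sum(1 for flagged, real in detections if flagged and real)
    fp = sum(1 for flagged, real in detections if flagged and not real)
    missed = sum(1 for flagged, real in detections if real and not flagged)
    flagged_total = tp + fp
    return {
        "detection_precision": tp / flagged_total if flagged_total else None,
        "false_positive_share": fp / flagged_total if flagged_total else None,
        "missed_issues": missed,
        "rca_accuracy": sum(rca_correct) / len(rca_correct) if rca_correct else None,
        "remediation_success": sum(remediation_ok) / len(remediation_ok) if remediation_ok else None,
    }


def ready_for_level_4(kpis, min_precision=0.95, min_rca=0.9, min_success=0.98):
    """Hypothetical policy: grant unattended execution only when every metric clears its bar."""
    checks = [(kpis["detection_precision"], min_precision),
              (kpis["rca_accuracy"], min_rca),
              (kpis["remediation_success"], min_success)]
    return all(value is not None and value >= bar for value, bar in checks)


kpis = agent_kpis(
    detections=[(True, True)] * 9 + [(True, False), (False, True)],
    rca_correct=[True, True, True, False],
    remediation_ok=[True] * 4,
)
print(kpis, ready_for_level_4(kpis))
```

In this made-up record every remediation worked, yet the agent still isn’t ready: one root-cause call in four was wrong, and a fix that works for the wrong reason is luck, not trust.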

The Philosophical Question

Here’s the harder thought experiment:

If an AI agent could fix 80% of incidents autonomously but occasionally make a serious mistake on the remaining 20%, would you still use it?

There’s no universal answer. It depends on your risk tolerance, the sensitivity of your systems, and how much control you’re willing to share.

Cisco’s graduated autonomy model acknowledges this spectrum—it lets you start conservatively, observe results, and advance only as the AI earns confidence through performance.

That’s the pragmatic path. It recognizes that network and security operations are inherently cautious professions—for good reason.

But it also sets the stage for what comes next: proof that this trust model can hold up in real production environments.

Amsterdam Preview: What I’m Watching For

Cisco’s AgenticOps story is compelling—but at some point, the narrative has to meet reality.

At Cisco Live Amsterdam 2026, credibility will hinge on what’s running in production, not what’s on slides.

Here’s what I’ll be watching for to tell whether this is real progress or just another round of AI theater.

1. Live Demonstrations with Real Data

Not polished keynotes. Not simulated dashboards.

I want to see AgenticOps and AI Canvas operating on actual customer telemetry in real time.

That means:

- An agent detecting an issue as it happens

- The Deep Network Model performing live cross-domain correlation

- Root cause analysis that includes its reasoning

- A remediation plan with risk and rollback steps clearly explained

- (Bonus points) A customer on stage validating that this isn’t a demo lab

If Cisco shows this level of transparency, it will mark a genuine turning point—proof that the architecture works beyond marketing.

2. Customer Adoption and Autonomy Levels

How many customers are actually running AgenticOps agents today, and at what levels of autonomy?

I’m looking for a breakdown something like:

- Level 1 (Observe): The majority, validating insights before action

- Level 2–3 (Recommend / Assisted Execution): Early adopters proving accuracy and workflow fit

- Level 4 (Autonomous Execution): Any brave enterprise willing to let the AI act independently

That distribution will reveal whether this is an early-adopter experiment or the start of mainstream adoption.

3. Accuracy and Reliability Metrics

If Cisco is confident, they’ll publish numbers—how often the agents are right, how often they aren’t.

Key questions:

- What’s the false-positive rate for anomaly detection?

- How accurate is automated root-cause correlation?

- What’s the success rate of AI-recommended remediations?

Transparency here would set Cisco apart.

If the numbers are strong, it will validate the architecture.

If they’re vague or withheld, it signals the tech is still maturing.

4. Integration Depth

Cross-domain reasoning only works if telemetry truly unifies.

So I’ll be looking for evidence that the Deep Network Model is pulling live data from Splunk across domains—not just hitting APIs but operating through the actual data fabric.

Key test: Can it correlate ThousandEyes, AppDynamics, XDR, and Catalyst telemetry together in real time?

If yes, we’re seeing real integration.

If no, the vision’s still in assembly.

5. Competitive Reality Check

Cisco isn’t alone here.

ServiceNow, Datadog, Palo Alto, and Juniper are all chasing similar “AI-for-Ops” territory.

The question is whether Cisco’s approach—domain-specific AI trained on unified telemetry—proves more actionable than the generalized or dashboard-driven alternatives.

I’ll be watching how clearly Cisco differentiates AgenticOps from the rest of the pack.

6. Trust and Control in Practice

The final test is governance:

How easily can operators adjust autonomy levels, override agent actions, and review decision history?

If the trust-control interface is well-designed—simple, auditable, and reversible—it’s a sign Cisco understands operational reality.

If it feels bolted on or opaque, adoption will stall fast.

The Litmus Test

If Cisco can demonstrate real, live, cross-domain automation—root-cause detection, validated reasoning, and safe execution—with customers on stage willing to vouch for it, then AgenticOps moves from concept to credibility.

If not, it remains what it is today: a promising architecture waiting for proof.

Either way, Amsterdam will be the moment of truth.

That’s where we’ll find out whether this story is still vision—or the start of a genuine shift in how networks run themselves.

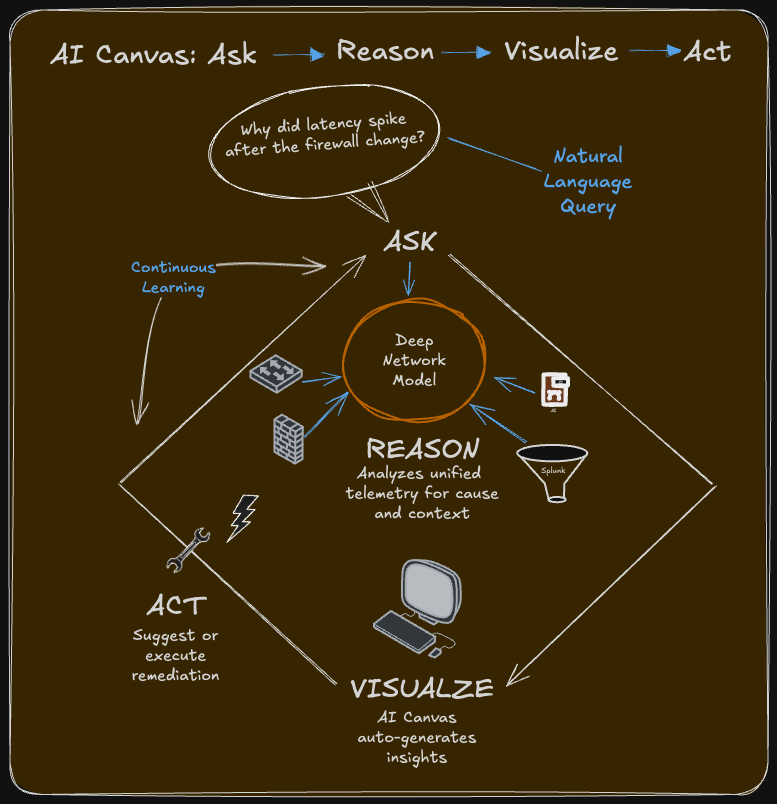

How This Fits the Transformation Puzzle

Step back for a second. AgenticOps isn’t a standalone feature — it’s the visible layer of a much larger architecture.

Each component in Cisco’s stack exists to feed or inform the next. Telemetry flows up; intelligence flows down. Remove one layer, and the system collapses.

The Stack That Makes AgenticOps Work

- Silicon One → high-performance networking silicon that generates rich, granular telemetry.

- Hypershield → security enforcement data from distributed agents, DPUs, and switches.

- Splunk → the unifying data fabric that normalizes and correlates telemetry across domains.

- Deep Network Model → domain-specific AI trained on that unified data to understand cause and effect.

- AgenticOps agents → the operational layer that detects issues, reasons about causes, and recommends or executes fixes.

- AI Canvas → the human interface where insight becomes action — the control surface for everything above.

It’s a tightly coupled stack.

AgenticOps without Splunk is just a chatbot with no context.

Splunk without the Deep Network Model is data without intelligence.

The Deep Network Model without AgenticOps is intelligence with no way to act.

Integration is the story.

And whether Cisco can make these layers function as one — seamlessly, in real environments — is the question Amsterdam will answer.

The Bottom Line

Here’s what Cisco is really saying with AgenticOps and AI Canvas:

The next era of network and security operations won’t rely on humans manually correlating data across tools.

It will rely on AI agents that understand your environment because they’re trained on your own telemetry, unified through a shared data fabric.

The technology bet — domain-specific LLMs trained on correlated network, security, and application data — is credible.

The architecture bet — graduated autonomy, explainability, and built-in validation — shows real maturity.

The execution bet — that the Deep Network Model will be accurate enough and the Splunk integration deep enough to enable safe, autonomous operations — remains to be proven.

But the direction is right.

AI for IT operations only works if it understands your infrastructure, not just generic networking theory.

Generic AI lacks context.

Domain-specific AI trained on unified telemetry can finally deliver it.

If Cisco executes well, this won’t just reduce MTTR or improve efficiency — it could fundamentally change how infrastructure is managed, shifting from reactive troubleshooting to proactive intelligence that prevents incidents altogether.

That’s not incremental.

That’s transformational.

Now it’s time to see if it works outside the demo stage.

Your Take

Are you already experimenting with AI-driven operations in your environment?

How do you navigate the trade-off between autonomy and control — would you trust an AI agent to act automatically once it’s proven accurate, or does human oversight remain non-negotiable?

For those in network or security operations: does this idea of domain-specific AI trained on unified telemetry solve real pain points, or is it adding AI complexity to workflows that already work?

And the bigger question — the one that matters most — what would it take for you to move from Level 2 (AI recommends, human executes) to Level 4 (AI executes, human reviews)?

Is that decision driven by metrics, or by philosophy?

I’ll be looking for answers in Amsterdam.

If you’re going — or already running AI Canvas or AgenticOps in production — I’d love to hear what you’re seeing.

Sources and Further Reading:

Cisco Official Resources:

- DevNet Dan - AI Canvas and Deep Network Model at Cisco Live

- Cisco Blog - WebexOne 2025: Connected Intelligence

- UC Today - Inside Cisco's AI Canvas with Amit Barave


Next in the series: Secure Connect and SASE Evolution—how Cisco's approach to secure access service edge matured from "our way or the highway" to "we'll meet you where you are," and why pragmatism might be the smartest competitive strategy.

About this series: I'm building toward Cisco Live Amsterdam in February 2026 by making sense of Cisco's biggest strategic moves. This is part learning exercise, part knowledge sharing. I'll be hosting the Cisco Live broadcast again this year, and I want to show up with a clear understanding of the storylines Cisco's building. If something here resonates—or if you think I'm missing the mark—let's talk about it.